Imitation Learning

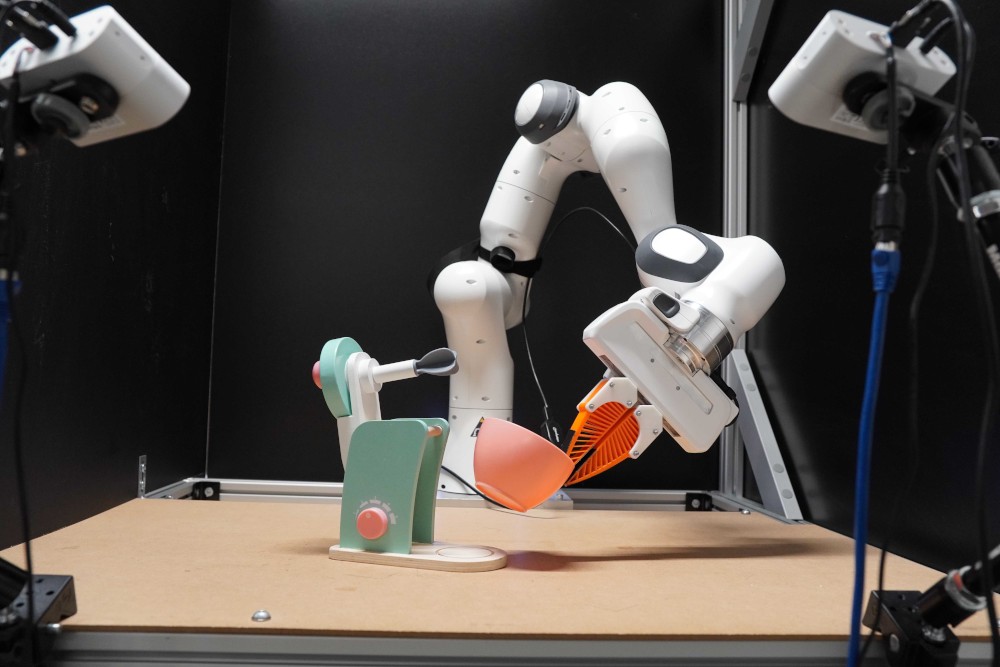

Our group works at the frontier of teaching robots to move and reason by observing human expertise. We specialize in scaling imitation learning across a wide range of complexity, from high-precision Diffusion Policies for specialized motor skills to large-scale Vision-Language-Action (VLA) models that bridge the gap between semantic understanding and physical execution.

We believe that for robots to move beyond simple automation, they must possess a deep, three-dimensional understanding of their environment. To achieve this, we move beyond 2D representations, integrating multi-modal inputs like Point Clouds to give our models the spatial awareness required for delicate, contact-rich manipulation.

Our research is grounded in the physical world. By deploying our methods on robots in both simulation and reality, we push the limits of long-horizon tasks, turning complex human demonstrations into robust, autonomous behaviors that can handle the unpredictability of real-life environments.

Key Areas

- Multi-Modal Behavior Cloning

- 3D Point Clouds

- Vision-Language-Action Models

Members

Recent News

New Humanoid Robots

We have three new humanoid robots from Unitree available for research:

- Unitree H1-2: Human-sized humanoid, primarily for manipulation tasks

- Two Unitree G1: Child-sized humanoids for locomotion and mobile manipulation tasks

Autonomous Learning Robots
